This article is a quick primer on Docker for those who have heard of it but have never actually worked with it. Even if you already have some experience with it, this article provides tips and context that will help you understand Docker and use it effectively.

Why use Docker

In short, Docker makes application development, packaging, and deployment easier. But why do we, as developers, need these things to be easier? First, let’s look at some problems that can occur in a typical environment without Docker.

Applications and their dependencies

Each application we develop has its own requirements and dependencies. For example:

- Application A needs version X of library Y, while application B needs version Z of that same library.

- Our application might store data in a database like PostgreSQL, MongoDB, or Elasticsearch.

- Certain environment variables need specific values.

- Specific files need to be present.

Managing all of this can cause all kinds of frustration and problems.

Development

When we develop, we usually do so on our own system. We can manually install and configure everything we need. That might take some work, but then we have everything set up perfectly for the application we are working on. All the dependencies and files are there, and everything is the correct version. Everything works, for the moment.

The environment might be fine for now, but what if we start developing another application? Does it use the same dependencies? Does it need different versions? For example, we might want to work with the latest versions of libraries and update them, but that update might break our older applications. Environment variables and files required by different applications might also clash, for example because they use the same names.

When we’re done developing an application, we might want to clean up all the clutter we have installed. In a shared environment, we have to be careful not to remove things other applications depend on.

All these things might be somewhat manageable on our own system. However, at some point we will want to deploy our application somewhere else, for example to the cloud or to a client’s machine.

Packaging and deployment

We will run into similar problems when deploying to other systems. Usually, we don’t know what the target system looks like. Even if we do, things on that system might change at some point. The application might even be delivered to a different system than we expected.

And, again, we might run into problems when multiple applications with overlapping dependencies run on the same system. Those dependencies could be at versions that are incompatible with our application.

For that reason, we would have to manage the configuration of the target system somehow at deployment time, or make our application compatible with the versions of the dependencies already installed there.

Also, some other piece of software could interfere with our application, for example by altering files or environment variables that our application requires. What happens if that other application crashes and corrupts memory or a file, or leaves resources locked? Our application could stop working. And what if it is our application that crashes? We don’t want to be responsible for bringing the whole system down.

The solution

Docker offers a solution. It allows us to build and package the environment together with the application. This means we can package the operating system (OS), the required dependencies and configuration, and the application itself into a single package. This package is called a “container image”. We can then distribute that container image to the target system(s) and deploy it there.

Docker runs the container image in an isolated environment, so by default it cannot affect anything outside that environment.

As a result, the environment the application runs in is the same everywhere, because it is predefined. Thanks to that, we never have to worry about what the target system looks like, as long as it can run Docker container images.

Solution for development

Docker helps with our development environment too, and not only by isolating environments when we develop multiple applications at the same time.

Another benefit is that there are Docker images that let us run pre-configured databases and other kinds of applications. With these, we can simulate the environment our own application will run in on our local machine. That allows us to do integration testing before we deploy to the target system.

For example, if the application has to integrate with a Microsoft SQL Server (MSSQL) database, we can pull and run an official pre-made container image that contains an MSSQL database. In the start-up script of the container, we can drop and recreate the databases and tables so that our tests always start with a clean slate.
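As a rough sketch of such a clean-slate start-up step, the Python below drops and recreates a table before the tests run. To keep it self-contained, it uses Python’s built-in sqlite3 module as a stand-in for MSSQL, and the orders table is purely hypothetical; against a real MSSQL container, the same idea would be expressed as equivalent T-SQL statements.

```python
import sqlite3

# Hypothetical test table. SQLite stands in for MSSQL here; with a real
# MSSQL container this would be equivalent T-SQL instead.
RESET_SCRIPT = """
DROP TABLE IF EXISTS orders;
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer TEXT NOT NULL,
    total REAL NOT NULL
);
"""


def reset_database(conn: sqlite3.Connection) -> None:
    """Drop and recreate the test tables so every run starts from a clean slate."""
    conn.executescript(RESET_SCRIPT)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    reset_database(conn)  # first start-up
    conn.execute("INSERT INTO orders (customer, total) VALUES (?, ?)", ("alice", 9.99))
    conn.commit()
    reset_database(conn)  # next start-up: leftover test data is gone
    print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 0
    conn.close()
```

Because the reset runs on every start-up, a test that leaves rows behind cannot influence the next run.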

The isolation of Docker containers also makes them easy to clean up: simply remove the container, and the image too if we no longer need it. Therefore, we don’t have to worry about clutter building up on our development machine.

Solution for packaging and deployment

As mentioned, the container image contains all the configuration and software dependencies that the application needs to run. All of this is defined in a simple, standardized format. When deploying, there is no need to worry about the state of the target system.

For example, target systems are usually “hardened” security-wise. This means they are often unable to access sources outside their direct network, such as the package repositories for Python, JavaScript, Rust, etc. With container images, everything is pre-installed, so it doesn’t matter whether those outside sources are reachable.

Let’s look at what Docker is in more detail in the next section.

What is Docker

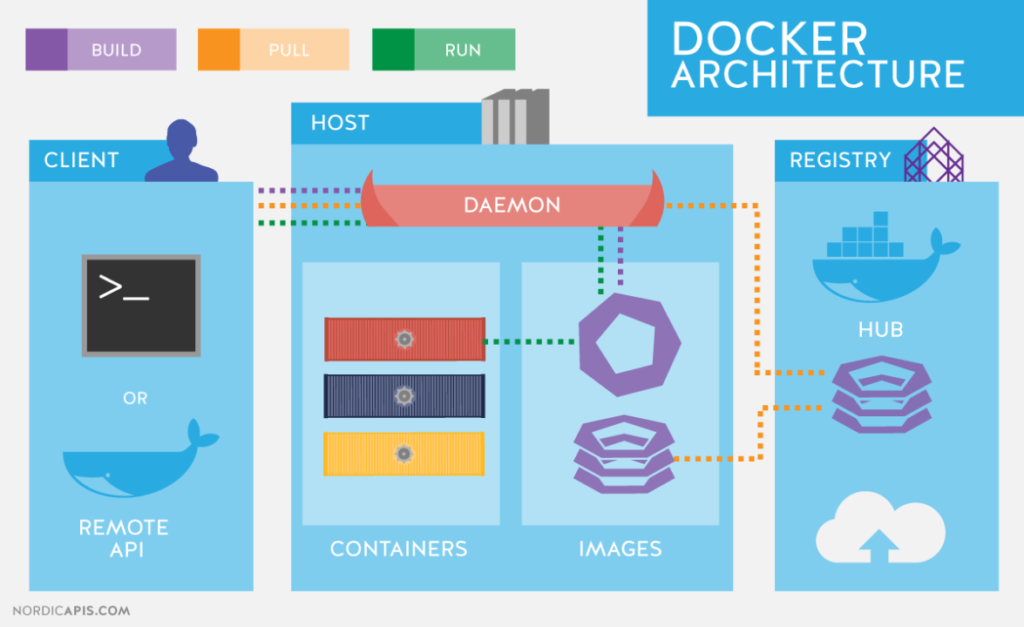

So what is Docker? As mentioned before, Docker is a platform for developing, shipping, and running applications. It does this by building container images and running them in containers on the Docker Engine on the host machine. Container images can be uploaded to and downloaded from a container registry.

Container image

Using Docker, we can package our applications into container images. A container image contains an OS, configuration files, libraries, and the application. Typically, the OS is some flavor of Linux, since Linux images can be very small. The more stripped down the image, the better, both for upload and download times and for security: fewer installed packages mean fewer potential vulnerabilities.

Container images are stored in a container registry, in a repository. The repository is named after the container image, and each version of the image in it is identified by a tag, such as latest or 1.0.0.

Containers

Containers are used to run container images. A container is an isolated environment that executes a container image. Because each container is isolated, we can run multiple containers from the same container image without problems.

A container is similar to a virtual machine (VM) in that it is an isolated system. However, containers virtualize the OS, not the machine: they share the host’s kernel instead of each running a complete OS of their own. This means containers use far fewer resources from the host system, which makes it possible to run many more containers than VMs on the same hardware.
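One way to picture how several containers can share one image is as a read-only image with a separate, initially empty writable layer per container. The toy model below uses Python’s ChainMap for this: writes go only into the container’s own layer, while reads fall through to the shared image. It is an illustration under that assumption, not how Docker is implemented (on Linux, Docker uses a union file system such as overlay2), and the file paths are made up.

```python
from collections import ChainMap
from types import MappingProxyType

# Toy "image": a read-only mapping of file path -> file contents (paths are made up).
image = MappingProxyType({
    "/etc/os-release": "Alpine Linux",
    "/usr/local/bin/app": "<binary>",
})


def start_container(image_fs):
    """Give a new container its own empty writable layer on top of the shared image."""
    return ChainMap({}, image_fs)


a = start_container(image)
b = start_container(image)

a["/tmp/state"] = "written by a"        # lands in a's writable layer only
b["/etc/os-release"] = "modified by b"  # shadows the image's file, for b only

print(a.get("/tmp/state"), b.get("/tmp/state"))         # written by a None
print(a["/etc/os-release"], "|", b["/etc/os-release"])  # Alpine Linux | modified by b
print(image["/etc/os-release"])                         # Alpine Linux
```

Neither container sees the other’s changes, and the image itself is never modified, which is why any number of containers can start from it.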

Container registry

The container registry is where container images are stored. There are public container registries but also private ones. Many of the major cloud providers like Microsoft Azure, Amazon Web Services, and Google Cloud Platform provide container registry resources that integrate with other resources offered by these platforms.

The container registry facilitates easy distribution of the container images within your environment. A system simply needs access to the registry and then it can download and run the container image it needs.

How to use Docker

In this section, we will briefly go over a simple example of how to define, build, and run a container image.

First, to use Docker we need to install the Docker software. Find instructions here.

Defining a container image

When creating a container image, we will more often than not use a publicly available image from Docker Hub as a base.

On Docker Hub we can find all kinds of base images. For example, if we wanted to create a container image for a project written in the Rust programming language, we could use the official Rust image as a base. Of course, there are also images for other programming languages, like Python or Node.js.

Container images are defined using a Dockerfile. Here is a very simple Rust example:

FROM rust:1-alpine3.14
RUN apk update && apk upgrade
COPY . .
RUN cargo install --path .
CMD ["test-docker"]

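This example first updates the software in the base image, then copies the entire contents of the project directory into the image, and builds and installs the code with a single command (cargo install). When a container starts, it executes the resulting executable.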

- FROM: Specifies the base image to build on.

- RUN: Runs a shell command during the image build. In the above example, the first RUN installs updates to the software that is part of the base image, and the second builds and installs the project.

- COPY: Copies files from outside the image (the build context, here the project directory) into the image.

- CMD: Specifies the command to execute when a container starts, as opposed to when the image is built. In this case, it is the executable built and installed from the Rust project.
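To make the difference between build-time and start-time instructions concrete, here is a small Python sketch that splits the example Dockerfile into instructions and extracts the command that CMD would run. It only handles the simple one-instruction-per-line form used here (no line continuations), and the PHASE descriptions are our own labels, not Docker terminology.

```python
import json
from typing import Optional

# The example Dockerfile from above, one instruction per line.
DOCKERFILE = """\
FROM rust:1-alpine3.14
RUN apk update && apk upgrade
COPY . .
RUN cargo install --path .
CMD ["test-docker"]
"""

# Our own labels for when each instruction used in this example takes effect.
PHASE = {
    "FROM": "build: choose the base image",
    "RUN": "build: execute a shell command",
    "COPY": "build: copy files into the image",
    "CMD": "container start: default command",
}


def parse(dockerfile: str) -> list[tuple[str, str]]:
    """Split each non-empty, non-comment line into (INSTRUCTION, arguments).

    Line continuations with a trailing backslash are not handled.
    """
    steps = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        steps.append((instruction.upper(), args.strip()))
    return steps


def start_command(steps: list[tuple[str, str]]) -> Optional[list[str]]:
    """Return the argv of the last CMD; only the last CMD takes effect, and its
    exec form is a JSON array."""
    cmds = [args for instruction, args in steps if instruction == "CMD"]
    return json.loads(cmds[-1]) if cmds else None


if __name__ == "__main__":
    steps = parse(DOCKERFILE)
    for instruction, args in steps:
        print(f"{instruction:<5} {PHASE.get(instruction, 'other')}: {args}")
    print(start_command(steps))  # ['test-docker']
```

Everything except CMD does its work while the image is built; CMD is only recorded in the image and acted on each time a container starts.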

Building a container image

The image can be built using the docker build command:

docker build -t rust-test-docker:latest .

The above command builds an image using the current directory, indicated by the final dot (.), as the build context and the Dockerfile found there. It names the image rust-test-docker and tags it with latest. If we run an image without specifying a tag, Docker defaults to the image tagged latest.
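The default-tag rule can be sketched as a small Python function that splits an image reference into a name and a tag. This is a simplified, hypothetical helper, not Docker’s own parser: it rejects digest references and does not add the docker.io/library/ prefix that Docker applies to short Docker Hub names such as rust.

```python
def split_reference(ref: str) -> tuple[str, str]:
    """Split an image reference 'name[:tag]' into (name, tag).

    A missing tag defaults to 'latest'. Digest references such as
    name@sha256:... are deliberately not handled in this sketch.
    """
    if "@" in ref:
        raise ValueError("digest references are not handled in this sketch")
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    # A colon before the last slash belongs to a registry host:port, not a tag.
    if last_colon > last_slash:
        return ref[:last_colon], ref[last_colon + 1:]
    return ref, "latest"


if __name__ == "__main__":
    print(split_reference("rust-test-docker"))        # ('rust-test-docker', 'latest')
    print(split_reference("rust-test-docker:1.0.0"))  # ('rust-test-docker', '1.0.0')
    print(split_reference("localhost:5000/rust-test-docker"))
    # ('localhost:5000/rust-test-docker', 'latest')
```

The host:port case shows why the sketch only treats a colon as a tag separator when it comes after the last slash.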

It is a good idea to use version numbers as tags, especially when distributing and deploying images to other systems:

docker build -t rust-test-docker:1.0.0 .

Docker keeps track of what has been built. Each instruction in the Dockerfile is a build step, and instructions such as RUN and COPY each produce a layer in the container image. When we build the image again without any changes, the build finishes almost instantly because every step is taken from the build cache. When a line in the Dockerfile changes, or files copied by the Dockerfile change, that step and every step after it are rebuilt. Steps before the changed one do not have to be rebuilt, thanks to Docker’s layered file system.
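The rebuild rule (a change invalidates that step and everything after it) can be modelled by giving each step a cache key that also depends on its parent step’s key. The Python sketch below is a toy model under that assumption, not Docker’s actual cache implementation; the input_digest strings are made-up stand-ins for the checksums Docker computes over files read by COPY.

```python
import hashlib


def step_keys(steps: list[tuple[str, str]]) -> list[str]:
    """Give each step a cache key that also depends on every step before it.

    Each step is (instruction_text, input_digest). input_digest stands in for
    the checksum of files a COPY reads ("" for steps that read no files).
    """
    keys, parent = [], ""
    for instruction, inputs in steps:
        key = hashlib.sha256(f"{parent}|{instruction}|{inputs}".encode()).hexdigest()
        keys.append(key)
        parent = key  # chaining: a changed step changes every key after it
    return keys


def plan_build(previous: list[tuple[str, str]], current: list[tuple[str, str]]) -> list[str]:
    """Mark each step of the new build as reused from the cache or rebuilt."""
    cache = set(step_keys(previous))
    return ["cached" if key in cache else "rebuild" for key in step_keys(current)]


if __name__ == "__main__":
    v1 = [
        ("FROM rust:1-alpine3.14", ""),
        ("RUN apk update && apk upgrade", ""),
        ("COPY . .", "sources-v1"),
        ("RUN cargo install --path .", ""),
        ('CMD ["test-docker"]', ""),
    ]
    # Same Dockerfile, but a source file changed, so the COPY input differs.
    v2 = [(i, "sources-v2" if i.startswith("COPY") else d) for i, d in v1]
    print(plan_build(v1, v1))  # ['cached', 'cached', 'cached', 'cached', 'cached']
    print(plan_build(v1, v2))  # ['cached', 'cached', 'rebuild', 'rebuild', 'rebuild']
```

The second run also shows why the example Dockerfile puts the package update before COPY: editing source files does not re-run it.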

Running a container image

Now that we have built a container image, we can start a container that runs it with the following command:

docker run rust-test-docker:latest

It is that simple. The docker run command starts a container from our container image, and the container runs until its main process finishes. A web server or database image, for example, will keep running until it is stopped manually or crashes. Any number of containers can run the same container image on the same machine.

Starting containers by hand is fine on your local machine if there are only one or two. But what if your application uses a microservice architecture with a dozen different components? How do we easily manage multi-container projects?

Multi-container projects

What if our application consists of multiple components, and/or what if we want multiple instances of our backend application container to handle heavy user traffic? How do we manage that?

That’s where container management systems come in. These systems allow us to specify the configuration of our environment and easily start and stop the entire environment. For example, we can specify exactly which container images to use, where they are located, how many instances of each container to run, which ports they should listen on, and so on.
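Underneath, this declarative approach compares the desired state we specified with what is actually running and acts on the difference. The Python sketch below shows that idea for instance counts only; the function and the service names are hypothetical and are not the API of Docker Compose, swarm mode, or Kubernetes.

```python
def reconcile(desired: dict[str, int], running: dict[str, int]) -> list[tuple[str, str, int]]:
    """List the start/stop actions that bring running instance counts to the desired ones."""
    actions = []
    for service in sorted(desired.keys() | running.keys()):
        diff = desired.get(service, 0) - running.get(service, 0)
        if diff > 0:
            actions.append(("start", service, diff))
        elif diff < 0:
            actions.append(("stop", service, -diff))
    return actions


if __name__ == "__main__":
    # Hypothetical services: three backend instances and one database are wanted,
    # while one backend and a leftover cache container are currently running.
    desired = {"backend": 3, "db": 1}
    running = {"backend": 1, "cache": 1}
    print(reconcile(desired, running))
    # [('start', 'backend', 2), ('stop', 'cache', 1), ('start', 'db', 1)]
```

Running the same comparison again after the actions succeed yields an empty list, which is what lets such systems repeat it safely whenever something changes.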

Well-known free systems:

- Docker in swarm mode: Current versions of Docker include swarm mode for natively managing a cluster of Docker Engines called a swarm. Use the Docker CLI to create a swarm, deploy application services to a swarm, and manage swarm behavior.

- Docker Compose: Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

- Kubernetes: Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

Going into detail about these systems is out of scope for this article.

Conclusion

We now have a basic understanding of why Docker is useful and how easy it is to use. The reason for Docker’s popularity should be clear: its method of isolating the application and packaging every dependency and configuration solves many of the problems typically encountered in a development-to-production workflow.

For a more detailed example of how to write a Dockerfile, see my article with an example for a Python Flask application: Dockerized Flask RESTful API: how to.

Please follow me on Twitter (@tmdev82) to keep up to date with upcoming articles on software development.